【ElasticSearch】大数据量情况下的前缀、中缀实时搜索方案

简述

业务开发中经常会遇到这样一种情况,用户在搜索框输入时要实时展示搜索相关的结果。要实现这个场景常用的方案有Completion Suggester、search_as_you_type。那么这两种方式有什么区别呢?一起来了解下。

环境说明:

数据量:9000w+

es版本:7.10.1

脚本执行工具:kibana

Completion Suggester和search_as_you_type的区别

1.Completion Suggester是基于前缀匹配、且数据结构存储在内存中,超级快,缺点是耗内存

2.search_as_you_type可以是前缀、中缀匹配,可以很快,但是要选好查询方式

3.Api调用方式不同,Completion Suggester是通过Suggest语句查询,search_as_you_type和常规查询方式一致

举个栗子

如何实现前缀匹配需求

使用Completion Suggester,示例如下:

- 创建索引

PUT /es_demo

{

"mappings": {

"properties": {

"title_comp": {

"type": "completion",

"analyzer": "standard"

}

}

}

}

- 初始化数据

POST _bulk

{"index":{"_index":"es_demo","_id":"1"}}

{"title_comp": "愤怒的小鸟"}

{"index":{"_index":"es_demo","_id":"2"}}

{"title_comp": "最后一只渡渡鸟"}

{"index":{"_index":"es_demo","_id":"3"}}

{"title_comp": "今天不加班啊"}

{"index":{"_index":"es_demo","_id":"4"}}

{"title_comp": "愤怒的青年"}

{"index":{"_index":"es_demo","_id":"5"}}

{"title_comp": "最后一只996程序猿"}

{"index":{"_index":"es_demo","_id":"6"}}

{"title_comp": "今日无事,勾栏听曲"}

- 查询DSL

通过前缀查询,查找以“愤怒”开头的字符串

GET /es_demo/_search

{

"suggest": {

"title_suggest": {

"prefix": "愤怒",

"completion": {

"field": "title_comp"

}

}

}

}

- 查询代码demo

@SpringBootTest

public class SuggestTest {

@Autowired

private RestHighLevelClient restHighLevelClient;

@Test

public void testComp() {

List<Map<String, Object>> list = suggestComplete("愤怒");

list.forEach(m -> System.out.println("[" + m.get("title_comp") + "]"));

}

public List<Map<String, Object>> suggestComplete(String keyword) {

CompletionSuggestionBuilder completionSuggestionBuilder = SuggestBuilders.completionSuggestion("title_comp");

completionSuggestionBuilder.size(5)

//跳过重复的

.skipDuplicates(true);

SuggestBuilder suggestBuilder = new SuggestBuilder();

suggestBuilder.addSuggestion("suggest_title", completionSuggestionBuilder)

.setGlobalText(keyword);

SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder();

searchSourceBuilder.suggest(suggestBuilder);

SearchRequest searchRequest = new SearchRequest("es_demo").source(searchSourceBuilder);

try {

SearchResponse response = restHighLevelClient.search(searchRequest, RequestOptions.DEFAULT);

CompletionSuggestion completionSuggestion = response.getSuggest().getSuggestion("suggest_title");

List<Map<String, Object>> suggestList = new LinkedList<>();

for (CompletionSuggestion.Entry.Option option : completionSuggestion.getOptions()) {

Map<String, Object> map = new HashMap<>();

map.put("title_comp", option.getHit().getSourceAsMap().get("title_comp"));

suggestList.add(map);

}

return suggestList;

} catch (IOException e) {

throw new RuntimeException("ES查询出错");

}

}

}

查询结果:

[愤怒的小鸟]

[愤怒的青年]

如何实现中缀匹配需求

使用search_as_you_type,此处提供了hanlp_index和standard两种分词器的字段示例。示例如下:

- 创建索引

PUT /es_search_as_you_type

{

"mappings": {

"properties": {

"title": {

"type": "text",

"fields": {

"han": {

"type": "search_as_you_type",

"analyzer": "hanlp_index"

},

"stan": {

"type": "search_as_you_type",

"analyzer": "standard"

}

}

}

}

}

}

- 初始化数据

POST _bulk

{"index":{"_index":"es_search_as_you_type","_id":"1"}}

{"title": "愤怒的小鸟"}

{"index":{"_index":"es_search_as_you_type","_id":"2"}}

{"title": "最后一只渡渡鸟"}

{"index":{"_index":"es_search_as_you_type","_id":"3"}}

{"title": "今天不加班啊"}

{"index":{"_index":"es_search_as_you_type","_id":"4"}}

{"title": "愤怒的青年"}

{"index":{"_index":"es_search_as_you_type","_id":"5"}}

{"title": "最后一只996程序猿"}

{"index":{"_index":"es_search_as_you_type","_id":"6"}}

{"title": "今日无事,勾栏听曲"}

- 查询DSL

GET /es_search_as_you_type/_search

{

"query": {

"match": {

"title.stan": {

"query": "的小",

"operator": "and"

}

}

}

}

- 查询代码demo

@SpringBootTest

public class SuggestTest {

@Autowired

private RestHighLevelClient restHighLevelClient;

@Test

public void testSearchAsYouType() {

List<Map<String, Object>> list = suggestSearchAsYouType("的小");

list.forEach(m -> System.out.println("[" + m.get("title") + "]"));

}

public List<Map<String, Object>> suggestSearchAsYouType(String keyword) {

//这里使用了search_as_you_type的2gram字段,可以根据自己需求调整配置

MatchQueryBuilder matchQueryBuilder = matchQuery("title.stan._2gram", keyword).operator(Operator.AND);

//需要返回的字段

String[] includeFields = new String[]{"title"};

SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder()

.query(matchQueryBuilder).size(5)

.fetchSource(includeFields, null)

.trackTotalHits(false)

.trackScores(true)

.sort(SortBuilders.scoreSort());

SearchRequest searchRequest = new SearchRequest("es_search_as_you_type").source(searchSourceBuilder);

try {

SearchResponse response = restHighLevelClient.search(searchRequest, RequestOptions.DEFAULT);

org.elasticsearch.search.SearchHits hits = response.getHits();

List<Map<String, Object>> suggestList = new LinkedList<>();

for (org.elasticsearch.search.SearchHit hit : hits) {

Map<String, Object> map = new HashMap<>();

map.put("title", hit.getSourceAsMap().get("title").toString());

suggestList.add(map);

}

return suggestList;

} catch (IOException e) {

throw new RuntimeException("ES查询出错");

}

}

}

查询结果:

[愤怒的小鸟]

分词器说明

查看分词结果的方式

第一种

指定分词器

GET _analyze

{

"analyzer": "standard",

"text": [

"愤怒的小鸟"

]

}

第二种

指定使用某个字段的分词器

POST es_search_as_you_type/_analyze

{

"field": "title.stan",

"text": [

"愤怒的青年"

]

}

hanlp_index和standard分词器的区别

standard分词器

- 默认会过滤掉符号

- 中文以单个字为最小单位,英文则会以空格符或其他符号或中文分隔作为一个单词

例:

GET _analyze

{

"analyzer": "standard",

"text": [

"愤怒的小鸟"

]

}

分词结果:

{

"tokens" : [

{

"token" : "愤",

"start_offset" : 0,

"end_offset" : 1,

"type" : "<IDEOGRAPHIC>",

"position" : 0

},

{

"token" : "怒",

"start_offset" : 1,

"end_offset" : 2,

"type" : "<IDEOGRAPHIC>",

"position" : 1

},

{

"token" : "的",

"start_offset" : 2,

"end_offset" : 3,

"type" : "<IDEOGRAPHIC>",

"position" : 2

},

{

"token" : "小",

"start_offset" : 3,

"end_offset" : 4,

"type" : "<IDEOGRAPHIC>",

"position" : 3

},

{

"token" : "鸟",

"start_offset" : 4,

"end_offset" : 5,

"type" : "<IDEOGRAPHIC>",

"position" : 4

}

]

}

hanlp_index分词器

- 默认不会过滤符号

- 通过语义等对字符串进行分词,会分出词语

例:

GET _analyze

{

"analyzer": "hanlp_index",

"text": [

"愤怒的小鸟"

]

}

分词结果:

{

"tokens" : [

{

"token" : "愤怒",

"start_offset" : 0,

"end_offset" : 2,

"type" : "a",

"position" : 0

},

{

"token" : "的",

"start_offset" : 2,

"end_offset" : 3,

"type" : "ude1",

"position" : 1

},

{

"token" : "小鸟",

"start_offset" : 3,

"end_offset" : 5,

"type" : "n",

"position" : 2

}

]

}

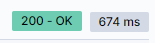

生产实践中的查询情况

基本都是几百毫秒就解决。ps:如果一条数据字段很多,最好只返回几个需要的字段即可,否则数据传输就要占用较多时间。

总结

当然,无论是Completion Suggester还是search_as_you_type的查询配置方式都还有很多,例如Completion Suggester的Context Suggester,search_as_you_type的2gram、3gram,还有查询类型match_bool_prefix、match_phrase、match_phrase_prefix等等。各种组合起来都会产生不同的效果,笔者这里只是列举出一种还算可以的方式。关于其他的查询类型和配置如何使用以及分别是怎么工作的,下次有空再聊聊。

官方文档链接

https://www.elastic.co/guide/en/elasticsearch/reference/7.10/search-as-you-type.html